If you’ve spent any time in AL development, you’ve probably reinvented a wheel or two. Written a quick parser for a date string, wrestled with URL encoding for an API call, or built a little helper to compare dates safely. The "Type Helper" Codeunit (ID 10, namespace System.Reflection) exists specifically to handle this kind of utility work—and it’s surprisingly comprehensive.

This post walks through useful procedures grouped by category, with practical AL examples for each.

You can find the full code for the example on GitHub.

What Is the Type Helper Codeunit?

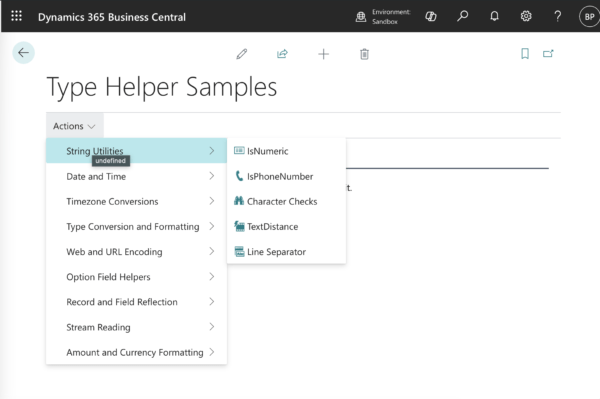

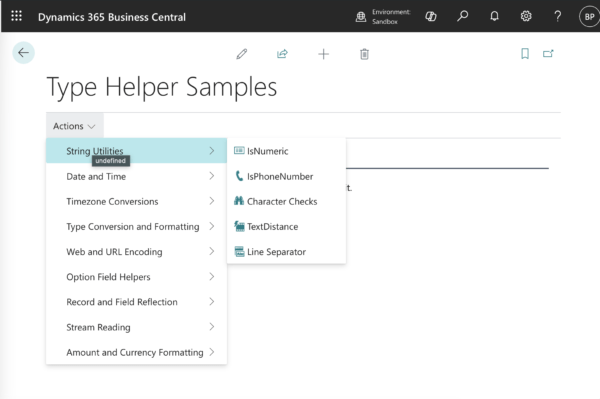

"Type Helper" is a base application Codeunit that provides a broad set of utility methods covering:

- String and character checks

- Date, time, and timezone formatting and conversion

- URL/HTML encoding

- Record and field reflection

- Option field helpers

- Stream reading

- Amount/currency format strings

It’s not flashy, but it saves real time. Before writing your own utility procedure, check here first.

String Utilities

IsNumeric

procedure IsNumeric(Text: Text): Boolean

Returns true if a text value can be parsed as a Decimal.

var

TypeHelper: Codeunit "Type Helper";

Result1, Result2: Boolean;

Value1, Value2: Text;

begin

Value1 := '42.5';

Value2 := 'hello';

Result1 := TypeHelper.IsNumeric(Value1); // true

Result2 := TypeHelper.IsNumeric(Value2); // false

Message('IsNumeric(''' + Value1 + '''): %1\IsNumeric(''' + Value2 + '''): %2', Result1, Result2);

end;

Useful for validating user input or imported data before calling Evaluate.
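Here is a minimal sketch of that validate-then-convert pattern. The codeunit ID, its name, and the TryParseAmount procedure are made up for illustration and are not part of the base application.

```al
codeunit 50100 "Numeric Input Demo"
{
    // Hypothetical helper: returns true and fills Amount when InputText is numeric.
    procedure TryParseAmount(InputText: Text; var Amount: Decimal): Boolean
    var
        TypeHelper: Codeunit "Type Helper";
    begin
        Amount := 0;

        // Reject anything that cannot be parsed as a Decimal.
        if not TypeHelper.IsNumeric(InputText) then
            exit(false);

        // The text passed the check, so convert it with the built-in Evaluate.
        exit(Evaluate(Amount, InputText));
    end;
}
```

The caller gets a Boolean back instead of a runtime error, which is usually what you want when looping over imported rows.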

IsPhoneNumber

procedure IsPhoneNumber(Input: Text): Boolean

Validates that a string contains only digits, spaces, parentheses, dashes, and +. It uses a regex under the hood.

var

TypeHelper: Codeunit "Type Helper";

Value1, Value2: Text;

begin

Value1 := '+1 (555) 123-4567';

Value2 := 'not-a-phone';

Message('IsPhoneNumber(''' + Value1 + '''): %1\IsPhoneNumber(''' + Value2 + '''): %2',

TypeHelper.IsPhoneNumber(Value1), TypeHelper.IsPhoneNumber(Value2));

end;

IsLatinLetter / IsDigit / IsUpper

procedure IsLatinLetter(ch: Char): Boolean

procedure IsDigit(ch: Char): Boolean

procedure IsUpper(ch: Char): Boolean

Character-level checks. Handy when parsing text character by character.

var

TypeHelper: Codeunit "Type Helper";

ch: Char;

begin

ch := 'A';

if TypeHelper.IsLatinLetter(ch) then

Message('It is a letter.');

ch := '3';

if TypeHelper.IsDigit(ch) then

Message('It is a digit.');

ch := 'Q';

if TypeHelper.IsUpper(ch) then

Message('It is an uppercase letter.');

end;

TextDistance

procedure TextDistance(Text1: Text; Text2: Text): Integer

Calculates the Levenshtein distance (I didn’t know what this was before digging into this Codeunit) between two strings—the minimum number of single-character edits to get from one string to the other. Useful for fuzzy matching or detecting near-duplicates.

var

TypeHelper: Codeunit "Type Helper";

Distance: Integer;

begin

Distance := TypeHelper.TextDistance('Business', 'Busines'); // 1

Distance := TypeHelper.TextDistance('Hello', 'World'); // 4

end;

Note: Strings are limited to ~1024 characters.
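For the near-duplicate use case, one possible approach is to wrap TextDistance in a threshold check. The sketch below assumes a hypothetical codeunit and procedure name, and the choice to ignore case is mine, not something TextDistance does for you.

```al
codeunit 50101 "Fuzzy Match Demo"
{
    // Hypothetical helper: treats two names as near-duplicates when they differ
    // by at most MaxEdits single-character edits.
    procedure IsNearDuplicate(Name1: Text; Name2: Text; MaxEdits: Integer): Boolean
    var
        TypeHelper: Codeunit "Type Helper";
    begin
        // Compare in a single case so that case differences do not count as edits.
        exit(TypeHelper.TextDistance(UpperCase(Name1), UpperCase(Name2)) <= MaxEdits);
    end;
}
```

Given the distance of 1 shown above for 'Business' and 'Busines', a call with MaxEdits = 1 would treat those two as a match. Keep the length limit in mind before feeding it long descriptions.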

NewLine / CRLFSeparator / LFSeparator

procedure NewLine(): Text

procedure CRLFSeparator(): Text[2]

procedure LFSeparator(): Text[1]

These return, respectively, the system newline string, a carriage-return + line-feed pair, and a single line feed. Use them instead of hardcoding character codes.

var

TypeHelper: Codeunit "Type Helper";

Msg: Text;

begin

Msg := 'Line one' + TypeHelper.CRLFSeparator() + 'Line two';

Message(Msg);

end;

Date and Time

FormatDateWithCurrentCulture

procedure FormatDateWithCurrentCulture(DateToFormat: Date): Text

The simplest way to format a Date using the current user’s locale. Great for displaying dates in messages or reports.

var

TypeHelper: Codeunit "Type Helper";

FormattedDate: Text;

begin

FormattedDate := TypeHelper.FormatDateWithCurrentCulture(WorkDate());

Message(FormattedDate);

end;

FormatDate (overloads)

procedure FormatDate(DateToFormat: Date; LanguageId: Integer): Text

procedure FormatDate(DateToFormat: Date; Format: Text; CultureName: Text): Text

Two overloads let you format with a specific language ID or a custom format string and culture name.

var

TypeHelper: Codeunit "Type Helper";

begin

// Format using a language ID (e.g., 1033 = en-US)

Message(TypeHelper.FormatDate(WorkDate(), 1033));

// Format using a .NET format string and culture name

Message(TypeHelper.FormatDate(WorkDate(), 'MMMM dd, yyyy', 'en-US'));

end;

CompareDateTime

procedure CompareDateTime(DateTimeA: DateTime; DateTimeB: DateTime): Integer

Compares two DateTime values with a built-in millisecond-level tolerance that accounts for SQL precision rounding. Returns 1, 0, or -1.

var

TypeHelper: Codeunit "Type Helper";

StartDateTime: DateTime;

EndDateTime: DateTime;

Result: Integer;

begin

StartDateTime := CreateDateTime(WorkDate(), 080000T);

EndDateTime := CreateDateTime(WorkDate(), 170000T);

Result := TypeHelper.CompareDateTime(StartDateTime, EndDateTime);

Message('CompareDateTime result: %1 (negative means first is earlier)', Result);

end;

Don’t compare DateTime values directly with = when they come from the database—use this instead.
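A small sketch of what that replacement could look like. The codeunit and the IsSameDateTime name are hypothetical. The only real call is CompareDateTime, checked against 0.

```al
codeunit 50102 "DateTime Compare Demo"
{
    // Hypothetical helper: true when both values are equal within
    // the tolerance that CompareDateTime applies.
    procedure IsSameDateTime(DateTimeA: DateTime; DateTimeB: DateTime): Boolean
    var
        TypeHelper: Codeunit "Type Helper";
    begin
        // 0 means "equal"; 1 and -1 mean one value is later than the other.
        exit(TypeHelper.CompareDateTime(DateTimeA, DateTimeB) = 0);
    end;
}
```

Wrapping the call like this keeps the "= 0" detail in one place, so the rest of your code reads like a plain equality check.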

AddHoursToDateTime

procedure AddHoursToDateTime(SourceDateTime: DateTime; NoOfHours: Integer): DateTime

Adds a number of hours to a DateTime value.

var

TypeHelper: Codeunit "Type Helper";

NewDT: DateTime;

begin

NewDT := TypeHelper.AddHoursToDateTime(CurrentDateTime, 8);

end;

GetHMSFromTime

procedure GetHMSFromTime(var Hour: Integer; var Minute: Integer; var Second: Integer; TimeSource: Time)

Breaks a Time value into its hour, minute, and second components.

var

TypeHelper: Codeunit "Type Helper";

H: Integer;

M: Integer;

S: Integer;

CurrentTime: Time;

begin

CurrentTime := Time;

TypeHelper.GetHMSFromTime(H, M, S, CurrentTime);

Message('Current time -> H:%1 M:%2 S:%3', H, M, S);

end;

EvaluateUnixTimestamp

procedure EvaluateUnixTimestamp(Timestamp: BigInteger): DateTime

Converts a Unix epoch timestamp (BigInteger, seconds since Jan 1 1970) to a DateTime, adjusting for the user’s timezone offset.

var

TypeHelper: Codeunit "Type Helper";

ResultDT: DateTime;

TimeStamp: BigInteger;

begin

TimeStamp := 1740657600;

ResultDT := TypeHelper.EvaluateUnixTimestamp(TimeStamp);

Message('EvaluateUnixTimestamp(' + Format(TimeStamp, 0, 9) + '): %1', ResultDT);

end;

GetCurrUTCDateTime / GetCurrUTCDateTimeAsText / GetCurrUTCDateTimeISO8601

procedure GetCurrUTCDateTime(): DateTime

procedure GetCurrUTCDateTimeAsText(): Text

procedure GetCurrUTCDateTimeISO8601(): Text

Three shortcuts for getting the current UTC time in different formats.

var

TypeHelper: Codeunit "Type Helper";

MyDT: DateTime;

MyText: Text;

begin

// DateTime value

MyDT := TypeHelper.GetCurrUTCDateTime();

// RFC 1123 text: "Fri, 27 Feb 2026 14:30:00 GMT"

MyText := TypeHelper.GetCurrUTCDateTimeAsText();

// ISO 8601: "2026-02-27T14:30:00Z"

MyText := TypeHelper.GetCurrUTCDateTimeISO8601();

end;

GetCurrUTCDateTimeISO8601 is especially useful when building API payloads.
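As a rough illustration, here is how the ISO 8601 string might be dropped into a JSON payload. The codeunit, the procedure name, and the 'sentAt' property are assumptions for the example. Your API will dictate the real property names.

```al
codeunit 50103 "Payload Demo"
{
    // Hypothetical helper: builds a tiny JSON body carrying the current UTC time.
    procedure BuildPayload(): Text
    var
        TypeHelper: Codeunit "Type Helper";
        Payload: JsonObject;
        PayloadText: Text;
    begin
        // 'sentAt' is a made-up property name used only in this sketch.
        Payload.Add('sentAt', TypeHelper.GetCurrUTCDateTimeISO8601());

        // Serialize the object to text so it can be used as a request body.
        Payload.WriteTo(PayloadText);
        exit(PayloadText);
    end;
}
```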

Timezone Conversions

GetUserTimezoneOffset

procedure GetUserTimezoneOffset(var Duration: Duration): Boolean

Returns the current user’s UTC offset as a Duration. Returns false if the user has no timezone set.

var

TypeHelper: Codeunit "Type Helper";

Offset: Duration;

begin

if TypeHelper.GetUserTimezoneOffset(Offset) then

Message('Offset in ms: %1', Offset);

end;

Type Conversion and Formatting

Evaluate

procedure Evaluate(var Variable: Variant; String: Text; Format: Text; CultureName: Text): Boolean

The general-purpose type evaluator. Pass in a Variant of the target type, a text string, an optional format, and an optional culture name.

var

TypeHelper: Codeunit "Type Helper";

Value: Variant;

ParsedDate: Date;

Ok: Boolean;

begin

ParsedDate := 0D;

Value := ParsedDate;

Ok := TypeHelper.Evaluate(Value, '02/27/2026', 'MM/dd/yyyy', 'en-US');

if Ok then

ParsedDate := Value;

end;

FormatDecimal

procedure FormatDecimal(Decimal: Decimal; DataFormat: Text; DataFormattingCulture: Text): Text

Formats a Decimal with a .NET format string and culture name. Useful for building API payloads or localized output.

var

TypeHelper: Codeunit "Type Helper";

Formatted: Text;

begin

Formatted := TypeHelper.FormatDecimal(1234.56, 'N2', 'en-US'); // "1,234.56"

Formatted := TypeHelper.FormatDecimal(1234.56, 'N2', 'de-DE'); // "1.234,56"

end;

IntToHex

procedure IntToHex(IntValue: Integer): Text

Converts an integer to its hexadecimal representation.

var

TypeHelper: Codeunit "Type Helper";

begin

Message(TypeHelper.IntToHex(255)); // "FF"

Message(TypeHelper.IntToHex(4096)); // "1000"

end;

Maximum / Minimum

procedure Maximum(Value1: Decimal; Value2: Decimal): Decimal

procedure Minimum(Value1: Decimal; Value2: Decimal): Decimal

Returns the larger or smaller of two Decimal values.

var

TypeHelper: Codeunit "Type Helper";

begin

Message('%1', TypeHelper.Maximum(10.0, 25.5)); // 25.5

Message('%1', TypeHelper.Minimum(10.0, 25.5)); // 10

end;

Web and URL Encoding

These are essential any time you’re calling external APIs or generating web links in AL.

UrlEncode / UrlDecode

procedure UrlEncode(var Value: Text): Text

procedure UrlEncode(var Value: SecretText): SecretText

procedure UrlDecode(var Value: Text): Text

var

TypeHelper: Codeunit "Type Helper";

Encoded: Text;

begin

Encoded := 'search term with spaces';

TypeHelper.UrlEncode(Encoded);

Message(Encoded); // "search+term+with+spaces"

end;

There’s also an overload that accepts and returns SecretText for encoding credentials without exposing the raw value.
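UrlDecode works the same way in reverse. The sketch below, using a hypothetical codeunit and procedure name, encodes a value and decodes it again. Both calls modify the variable in place because the parameter is passed by var.

```al
codeunit 50104 "Url Round Trip Demo"
{
    // Hypothetical helper: encodes a value, decodes it again, and returns the result.
    procedure RoundTrip(Original: Text): Text
    var
        TypeHelper: Codeunit "Type Helper";
        Value: Text;
    begin
        Value := Original;

        TypeHelper.UrlEncode(Value); // Value now holds the encoded text
        TypeHelper.UrlDecode(Value); // Value is decoded back in place

        exit(Value);
    end;
}
```

For ordinary text such as the search string above, the result should match the original input.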

HtmlEncode / HtmlDecode

procedure HtmlEncode(var Value: Text): Text

procedure HtmlDecode(var Value: Text): Text

Encodes characters like <, >, & for safe HTML output, or decodes them back.

var

TypeHelper: Codeunit "Type Helper";

SafeHtml: Text;

begin

SafeHtml := '<script>alert("xss")</script>';

TypeHelper.HtmlEncode(SafeHtml);

Message(SafeHtml); // "&lt;script&gt;alert(&quot;xss&quot;)&lt;/script&gt;"

end;

UriEscapeDataString / UriGetAuthority

procedure UriEscapeDataString(Value: Text): Text

procedure UriGetAuthority(Value: Text): Text

UriEscapeDataString percent-encodes a value (RFC 3986). UriGetAuthority extracts the scheme + host from a URL.

var

TypeHelper: Codeunit "Type Helper";

begin

Message(TypeHelper.UriEscapeDataString('hello world')); // "hello%20world"

Message(TypeHelper.UriGetAuthority('https://api.example.com/v1/items'));

// "https://api.example.com"

end;

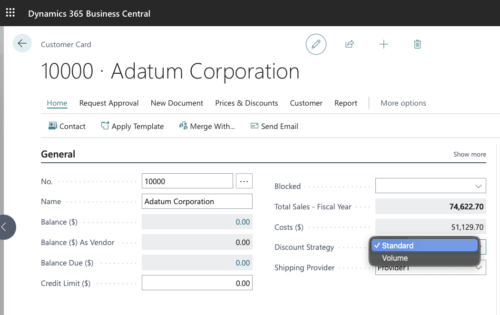

Option Field Helpers

GetOptionNo

procedure GetOptionNo(Value: Text; OptionString: Text): Integer

Looks up the integer index of an option value string within an option set string. Returns -1 if not found.

var

TypeHelper: Codeunit "Type Helper";

Idx: Integer;

begin

Idx := TypeHelper.GetOptionNo('Posted', 'Open,Released,Posted,Canceled');

// Returns 2

end;

GetNumberOfOptions

procedure GetNumberOfOptions(OptionString: Text): Integer

Counts how many comma-separated options are in an option string.

var

TypeHelper: Codeunit "Type Helper";

begin

Message('%1', TypeHelper.GetNumberOfOptions('Open,Released,Posted')); // 2 (counts commas)

end;

Watch out: this counts commas, so it returns n - 1 for n options. Adjust accordingly.
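One way to adjust is a thin wrapper that adds the missing one. This is a sketch with a hypothetical codeunit and procedure name, and it assumes the option string is not empty.

```al
codeunit 50105 "Option Count Demo"
{
    // Hypothetical wrapper: returns the number of options in a non-empty option string.
    procedure CountOptions(OptionString: Text): Integer
    var
        TypeHelper: Codeunit "Type Helper";
    begin
        // GetNumberOfOptions counts the commas, so n options yield n - 1.
        exit(TypeHelper.GetNumberOfOptions(OptionString) + 1);
    end;
}
```

Since GetNumberOfOptions returns 2 for 'Open,Released,Posted', the wrapper returns 3 for the same string.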

Record and Field Reflection

GetField / GetFieldLength

procedure GetField(TableNo: Integer; FieldNo: Integer; var Field: Record Field): Boolean

procedure GetFieldLength(TableNo: Integer; FieldNo: Integer): Integer

GetField retrieves field metadata from the Field virtual table and returns false if the field doesn’t exist or is obsolete (ObsoleteState = Removed). GetFieldLength returns the declared length of a text field.

var

Customer: Record Customer;

FieldRec: Record Field;

TypeHelper: Codeunit "Type Helper";

begin

if TypeHelper.GetField(Database::Customer, Customer.FieldNo(Name), FieldRec) then

Message('Max length: %1', TypeHelper.GetFieldLength(Database::Customer, Customer.FieldNo(Name)));

end;

SortRecordRef

procedure SortRecordRef(var RecRef: RecordRef; CommaSeparatedFieldsToSort: Text; Ascending: Boolean)

Applies a sort order to a RecordRef using a comma-separated list of field names.

var

TypeHelper: Codeunit "Type Helper";

RecRef: RecordRef;

begin

RecRef.Open(Database::Customer);

TypeHelper.SortRecordRef(RecRef, '"No."', true); // ascending by No.

if RecRef.FindSet() then

repeat

// ...

until RecRef.Next() = 0;

end;

GetKeyAsString

procedure GetKeyAsString(RecordVariant: Variant; KeyIndex: Integer): Text

Returns the key fields of a record as a comma-separated string. Useful for logging or telemetry.

var

Cust: Record Customer;

TypeHelper: Codeunit "Type Helper";

KeyText: Text;

begin

if Cust.FindFirst() then begin

KeyText := TypeHelper.GetKeyAsString(Cust, 1);

Message('GetKeyAsString: %1', KeyText);

end;

end;

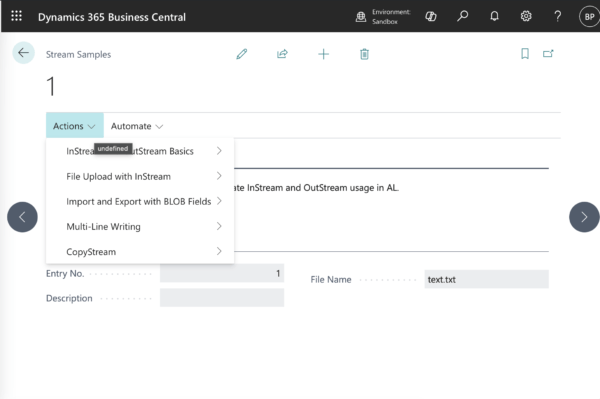

Stream Reading

ReadAsTextWithSeparator / TryReadAsTextWithSeparator

procedure ReadAsTextWithSeparator(InStream: InStream; LineSeparator: Text): Text

[TryFunction]

procedure TryReadAsTextWithSeparator(InStream: InStream; LineSeparator: Text; var Content: Text): Boolean

Reads an InStream into a Text value, using a specified line separator. TryReadAsTextWithSeparator wraps the call in a [TryFunction] for error-safe reading.

var

TempBlob: Codeunit "Temp Blob";

TypeHelper: Codeunit "Type Helper";

FileContent: Text;

IStream: InStream;

OStream: OutStream;

begin

TempBlob.CreateOutStream(OStream);

OStream.WriteText('Line 1');

OStream.WriteText(TypeHelper.CRLFSeparator());

OStream.WriteText('Line 2');

OStream.WriteText(TypeHelper.CRLFSeparator());

OStream.WriteText('Line 3');

TempBlob.CreateInStream(IStream);

FileContent := TypeHelper.ReadAsTextWithSeparator(IStream, TypeHelper.CRLFSeparator());

Message('ReadAsTextWithSeparator:\%1', FileContent);

end;
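The Try variant follows the signature shown above and hands the content back through a var parameter. Here is a minimal sketch, assuming a hypothetical codeunit and a ReadOrEmpty procedure that falls back to an empty string when reading fails.

```al
codeunit 50106 "Stream Read Demo"
{
    // Hypothetical helper: returns the blob content, or '' if reading fails.
    procedure ReadOrEmpty(var TempBlob: Codeunit "Temp Blob"): Text
    var
        TypeHelper: Codeunit "Type Helper";
        IStream: InStream;
        Content: Text;
    begin
        TempBlob.CreateInStream(IStream);

        // The Boolean result tells us whether the read succeeded.
        if TypeHelper.TryReadAsTextWithSeparator(IStream, TypeHelper.LFSeparator(), Content) then
            exit(Content);

        // Reading failed; GetLastErrorText() holds the reason if you want to log it.
        exit('');
    end;
}
```

Whether an empty string is the right fallback depends on your scenario. In an import routine you may prefer to log the error and skip the file.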

Amount and Currency Formatting

GetAmountFormatLCYWithUserLocale

procedure GetAmountFormatLCYWithUserLocale(): Text

procedure GetAmountFormatLCYWithUserLocale(DecimalPlaces: Integer): Text

Returns a BC format string for displaying amounts in the local currency, formatted for the current user’s locale. Pass an optional decimal places count.

var

TypeHelper: Codeunit "Type Helper";

AmtFormat: Text;

begin

AmtFormat := TypeHelper.GetAmountFormatLCYWithUserLocale();

Message(Format(1234.56, 0, AmtFormat));

end;

GetXMLAmountFormatWithTwoDecimalPlaces / GetXMLDateFormat

procedure GetXMLAmountFormatWithTwoDecimalPlaces(): Text

procedure GetXMLDateFormat(): Text

Return BC format strings suitable for XML output—two decimal places for amounts and the standard XML date format.

var

TypeHelper: Codeunit "Type Helper";

begin

Message(Format(Today, 0, TypeHelper.GetXMLDateFormat())); // e.g., "2026-02-27"

Message(Format(12345.6, 0, TypeHelper.GetXMLAmountFormatWithTwoDecimalPlaces())); // "12345.60"

end;

Wrapping Up

The "Type Helper" codeunit is one of the most useful utility libraries in BC’s base application—and one of the most underused. Before writing your own string parser, encoding helper, or date workaround, it’s worth skimming the procedure list. There’s a good chance something here already does exactly what you need.

A few things to keep in mind:

- LanguageIDToCultureName and GetCultureName are obsolete as of v26. Use Codeunit 43 Language instead.

- UrlEncodeSecret is obsolete as of v27. Use the SecretText overload of UrlEncode instead.

Learn more: Codeunit “Type Helper” – Microsoft Learn

Note: The code and information discussed in this article are for informational and demonstration purposes only. Always test in a sandbox environment before deploying to production. This content was written referencing Microsoft Dynamics 365 Business Central 2025 Wave 2.
